GPU on Leverage: GPU Cloud economics explained

Navigating the battleground of GPU compute providers.

It is a battle worth fighting for those who can weather GPU demand volatility and optimize flops/dollar. We will see at least 3 $10B+ GPU-specific Clouds built this decade. Here’s why.

Setting the Stage

Considered one of the most lucrative businesses in 2023, GPU-specific Clouds kept making headlines. But will the thirst for GPU compute continue, and are we on track to the highly anticipated Trillion-Dollar Cluster?

Innovation cycles always split opinion. And whether the compute gamble has paid off is AI’s biggest question right now.

What’s not disputed, however, is that the capital investment going into training and serving models has been massive, and likely won’t slow down even if model benchmarks do. The quest for compute has been a seemingly unstoppable force.

GPU-specific Clouds, providing compute for training and running AI models, have been the ultimate shovel of the AI Gold Rush. At the same time, they have not been attractive to venture investors because they are capital-intensive businesses. Some have even likened them to WeWork: rental arbitrageurs bound to the supply and demand dynamics of a single asset, the GPU.

GPU Clouds sell GPU-hours, so their success and margins are indeed tied to GPU chip supply dynamics. Nonetheless, it is a massive market with room for winners, and predicting how the landscape will evolve requires understanding several aspects of GPU Clouds and their infrastructure. Through an analysis of AI model development, LLM training runs and the economics of GPU Clouds, we develop an intuition for what the future looks like.

That future is one of hyperscaler dominance, sustained demand for compute, and a few breakthrough multi-billion-dollar vertically integrated players.

Traditional Cloud Computing

First let’s take a look at traditional cloud computing and see what insights we can derive from this $600B market to inform us about GPU Clouds.

Cloud services are high-capex, lower-opex businesses with economies of scale, leading to a market dominated by just a few players. Margins have historically been lower due to commoditization and high infrastructure costs. Gross margins have nonetheless risen in recent years to around 55%, thanks to higher-margin ancillary services (security, storage, etc.).

Outside of the hyperscalers, there are still a few companies with several billion dollars in revenue. Some have slightly different value propositions from the large providers; others hold a few large contracts that don’t churn, because cloud services are largely undifferentiated.

For example, DigitalOcean ($4B market cap, 55% gross margins, 20% CAGR to $750M ARR) focuses on smaller clients and offers less setup overhead than the hyperscalers. Rackspace (PE-owned) generates several billion dollars in revenue servicing Apollo’s portfolio. Cisco has a Cloud offering with specialized security services, which accounts for around 20% of the $200B company.

The traditional cloud computing market is an oligopoly, with a few other players finding their niche in a big market. This state of the world is a good benchmark for the combined traditional and GPU Cloud market of the future. Later we will consider what the equivalent ‘higher-margin ancillary services’ may be in AI infrastructure.

GPU Clouds vs Hyperscalers. A Whole New Game?

Are GPU Clouds Different from Traditional Clouds? Yes and No.

Both forms of cloud computing services are somewhat commoditized. Service providers compete on cost, availability, scale and support. Both also lend themselves to outsourcing; even the largest companies, like Microsoft, outsource parts of their compute when they can.

Since they are relatively undifferentiated, there is rarely a reason to churn. This is good for players who win clients and can scale with them (like CoreWeave with OpenAI), and it has been true in traditional cloud services as well.

There is an interesting difference in demand between traditional and GPU Clouds. For purpose-built, large-scale GPU-accelerated workloads, demand is much more narrowly distributed: spend is concentrated in about 50 companies instead of 5,000. The dynamics also differ over time. There are bulky periods of demand as LLMs are trained (or as models experience high inference volumes), and both are expensive.

There are also larger secular trends at play as markets react to AI.

GPU Clouds have to weather the implicit volatility of demand. It’s important for these businesses to find natural hedges or other ways to smooth over these demand curves in a way traditional clouds never had to.

What could a downturn look like? Do we ever reach a steady state of compute demand?

These are tricky questions to answer, but to most market participants a downturn seems a long way off. Scaling laws suggest that the current pace of compute expansion could hold for a while, as long as customers and providers are not capital constrained.

Mitigating unaccounted-for liabilities is vital, which is why securing larger, long-term contracts is a better strategy than selling on-demand capacity, and possibly the only strategy that will survive fluctuations in GPU demand over the coming years.

On the technical front, GPU clusters are very different from CPU nodes: they fail much more frequently and require about 4x the power, so GPU DCs (data centres) are designed very differently from CPU ones.

Wires, so many wires.

The complexity of these DCs will also increase as they continue to scale up; managing this complexity has become the secret sauce of GPU Clouds.

Vertically integrated players will have a massive advantage in optimizing performance, thanks to tighter hardware-software integration, the ability to customize clusters, and control over cost levels. I would go so far as to contend that only vertically integrated players will win in the long run.

When it comes to software, GPU Clouds have different requirements and therefore emphasize specific parts of their stack.

Networking and bandwidth are vital components. Training runs have more dependencies, and therefore different requirements for granular resource provisioning and reloading. Frameworks that help optimize flops/dollar, like auto-resumption (with checkpointing), run parallelization and other customized cost-saving methods, are table stakes.
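Auto-resumption with checkpointing can be illustrated with a minimal, framework-agnostic sketch. Everything here is hypothetical: the JSON checkpoint file, `train`, `run_with_auto_resume` and the injected failure stand in for a real framework's checkpoint API and a cluster scheduler's restart logic. What it shows is that a hardware failure only costs the steps since the last checkpoint, not the whole run.

```python
import json
import os
import tempfile


def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temp file, then atomically rename it over the old
    checkpoint so a crash mid-write never leaves a corrupt file behind."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def load_checkpoint(path: str) -> dict:
    """Return the last saved state, or a fresh state if none exists."""
    if not os.path.exists(path):
        return {"step": 0, "loss": None}
    with open(path) as f:
        return json.load(f)


def train(path: str, total_steps: int, ckpt_every: int, fail_at=None) -> dict:
    """Hypothetical training loop that always resumes from the latest checkpoint."""
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        if step == fail_at:
            raise RuntimeError(f"simulated GPU failure at step {step}")
        # Stand-in for a real forward/backward pass and optimizer update.
        state = {"step": step + 1, "loss": 1.0 / (step + 1)}
        if state["step"] % ckpt_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state


def run_with_auto_resume(path, total_steps, ckpt_every, failures, max_restarts=5):
    """Restart the job after each failure. `failures` lists the step at which
    each successive attempt crashes (hypothetical fault injection)."""
    pending = list(failures)
    restarts = 0
    while True:
        fail_at = pending.pop(0) if pending else None
        try:
            return train(path, total_steps, ckpt_every, fail_at), restarts
        except RuntimeError:
            restarts += 1
            if restarts > max_restarts:
                raise


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        ckpt = os.path.join(d, "ckpt.json")
        final, restarts = run_with_auto_resume(ckpt, total_steps=100, ckpt_every=10, failures=[37])
        print(final, restarts)  # {'step': 100, 'loss': 0.01} 1
```

The crash at step 37 loses only the 7 steps since the checkpoint at step 30. Checkpointing more often shrinks that loss but spends more time on I/O, which is exactly the kind of flops/dollar trade-off these frameworks are tuned for.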

The difficulty of running large clusters increases with scale. GPU Clouds need to build sophisticated software to optimize MFU (model FLOPs utilization), and this software will become an essential weapon on the battlefield.
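MFU is commonly defined as the model FLOPs a training job actually achieves per second divided by the hardware's theoretical peak. The sketch below uses the standard approximation of about 6 FLOPs per parameter per training token for a dense transformer (ignoring attention FLOPs) and assumes an H100 SXM dense BF16 peak of roughly 989 TFLOPS; the model size, cluster size and throughput in the example are hypothetical. It also shows why MFU feeds directly into flops/dollar: at a fixed hourly price, useful compute per dollar scales linearly with MFU.

```python
H100_PEAK_BF16_FLOPS = 989e12  # assumed dense BF16 peak per GPU (no sparsity)


def training_flops_per_token(n_params: float) -> float:
    # ~2N FLOPs forward + ~4N backward per token for a dense transformer.
    return 6.0 * n_params


def mfu(n_params: float, tokens_per_sec: float, num_gpus: int,
        peak_flops_per_gpu: float = H100_PEAK_BF16_FLOPS) -> float:
    """Achieved model FLOPs per second divided by the cluster's peak."""
    achieved = training_flops_per_token(n_params) * tokens_per_sec
    peak = num_gpus * peak_flops_per_gpu
    return achieved / peak


def dollars_per_exaflop(price_per_gpu_hr: float, model_flops_utilization: float,
                        peak_flops_per_gpu: float = H100_PEAK_BF16_FLOPS) -> float:
    """Cost of 1e18 useful FLOPs at a given hourly price and MFU."""
    useful_flops_per_hr = peak_flops_per_gpu * model_flops_utilization * 3600
    return price_per_gpu_hr / (useful_flops_per_hr / 1e18)


if __name__ == "__main__":
    # Hypothetical job: 7B-parameter model on 1,024 GPUs at 10M tokens/s overall.
    m = mfu(n_params=7e9, tokens_per_sec=10e6, num_gpus=1024)
    print(f"MFU: {m:.1%}")
    for u in (0.3, m, 0.5):
        print(f"MFU {u:.0%}: ${dollars_per_exaflop(2.50, u):.2f} per 1e18 useful FLOPs")
```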

Other similar higher-margin products, unknown to the market today, could also emerge and become key differentiators for GPU Clouds.

The Next Frontier: AI’s Insatiable Thirst

GPU Cloud businesses are fundamentally a levered bet on demand for GPU compute. So, let’s evaluate what demand will look like.

The quest for compute has been a seemingly unstoppable force: GPU efficiency gains, supply chain miracles, power unlocks... It took only 122 days for xAI to bring together a 100k-GPU liquid-cooled cluster, the largest in the world. It’s difficult to see the next decade come and go without this compute race continuing.

To more precisely forecast long-term GPU demand, we need some perspective on how big foundation models get, and how many models will win. For now, let’s assume that scaling laws hold (that is, more compute = more intelligence).

On models: it’s likely that the model landscape evolves into a state not so dissimilar from today’s, in which only extremely well-funded companies join the race. In this world, there are likely several large generic model providers per continent, let’s say 20-30 globally. A few specific domains (Software Development, Healthcare, Robotics, etc.) will have 1-2 winners each.

That adds up to roughly 50 meaningful AI companies training and running AI models globally. Naturally, demand will accumulate toward the top and create an inverted pyramid.

How model development progresses is unknown, but it is not out of the question that these 50 companies will require exponentially more compute (GPT-4 cost in excess of $100MM to train).

Technological progress usually follows S-curves, but compute may be one of the few that holds as a true exponential.

Of course, there is also fine-tuning and inference (inference-time compute has even become a hit), and lags between them could create ever-evolving internal demand curves. Model companies will serve inference for their own models, and once models reach certain benchmarks, inference may even outgrow training in compute dollars.

The largest foundation model companies are already on the cusp of spending $1B/year on compute. In the next few years, this will easily expand to hundreds of billions of dollars per year in training runs, in aggregate across ~50 companies. That would be comparable to previous quests of national importance, and would make AI compute bigger than the traditional cloud market.

Ultimately, GPU Clouds will aggregate demand across all forms of compute, across chips (even non-Nvidia ones), and across these 50 companies.

Even with a much more conservative outlook, we will still see several companies (just like in traditional cloud) capture non-trivial market share. Currently, implied AI capex (chips, DC infrastructure) is $300B, and the amortized figure is nearing $100B annually as we head into 2025 (as indicated in AI’s $600B Question).

Let’s take a hypothetical GPU Cloud company valued similarly to a traditional one like DigitalOcean, with an Enterprise Value (EV) of ~$4B and EBITDA of ~$200M, yielding an EV/EBITDA multiple of 20x. Assuming today’s compute demand (~$100B) grows at a modest 10% per year, and hyperscalers take as much as 75% market share over the next 5 years, we see:

$40B in annual revenue available for GPU Clouds only

$1-2B in obtainable EBITDA

At 20x EBITDA multiples, there is room for 3-4 $10B GPU-specific Cloud companies to be built.
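The back-of-the-envelope valuation above can be checked in a few lines. This sketch only reproduces the arithmetic under the stated assumptions ($100B of demand, 10% annual growth, 5 years, 75% hyperscaler share, a 20x multiple); the $1-2B of obtainable EBITDA is taken from the text as given rather than derived, and the function names are hypothetical.

```python
def gpu_cloud_revenue_pool_bn(demand_today_bn: float, annual_growth: float,
                              years: int, hyperscaler_share: float) -> float:
    """Demand compounded forward, minus the share hyperscalers capture."""
    future_demand = demand_today_bn * (1 + annual_growth) ** years
    return future_demand * (1 - hyperscaler_share)


def implied_enterprise_value_bn(ebitda_bn: float, ev_to_ebitda: float) -> float:
    return ebitda_bn * ev_to_ebitda


if __name__ == "__main__":
    multiple = 4.0 / 0.2  # DigitalOcean-like EV / EBITDA from the text (20x)
    pool = gpu_cloud_revenue_pool_bn(100.0, 0.10, 5, 0.75)
    print(f"Revenue pool for GPU Clouds in year 5: ~${pool:.0f}B")
    for ebitda in (1.0, 2.0):  # the text's $1-2B of obtainable EBITDA, taken as given
        ev = implied_enterprise_value_bn(ebitda, multiple)
        print(f"EBITDA ${ebitda:.0f}B at {multiple:.0f}x -> ${ev:.0f}B of value, "
              f"or {ev / 10:.0f} companies worth $10B")
```

Taken at face value, $100B compounding at 10% for 5 years is about $161B, a quarter of which is the ~$40B pool. $1-2B of EBITDA at 20x supports roughly $20-40B of combined enterprise value, i.e. two to four $10B companies, a range that contains the 3-4 estimate.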

Model development through massive training runs is clearly becoming a matter of national security. As governments become more involved in GPU Clouds, these numbers could rise substantially. I encourage readers to read Situational Awareness for more on this.

The Nitty Gritty: GPU Cloud’s Business Model

We’ll now focus on the economics of GPU Clouds that own their hardware via debt financing, like CoreWeave and Fluidstack (Private Cloud), because most large compute deals are being made with GPU owners rather than renters.

Let’s look at the typical cost structure:

Hardware: chips

Operational: physical security, maintenance, compliance, security, installation, tech support

Software: monitoring, software infrastructure, security, networking (ingress/egress traffic, InfiniBand)

DC space: buy or lease

Power/Energy

Data storage: SSDs, HDDs, backups, file systems

Cooling

The largest COGS components are energy (30%), hardware (25%) and software/data/networking (20%).

Assuming a 3-year lifespan and a $30k H100 machine rented at $2.50/hr, the unit economics break down as follows:

- $0.10/hr of electricity cost

- $0.30/hr of DC operational cost

- $0.10/hr of software/networking, etc.

- $1.14/hr of depreciation ($30k spread over 3 × 8,760 hours)

This yields $2.50 - $0.10 - $0.30 - $0.10 - $1.14 = ~$0.86 of gross profit per hour, a gross margin of ~34%.
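The breakdown above can be reproduced in a few lines, which also makes it easy to vary the parameters that matter most. This sketch takes the text's figures at face value and adds one assumption of its own: a utilization parameter, under the simplification that every cost (including electricity) is paid for every wall-clock hour, so only revenue scales with utilization. `GpuUnitEconomics` is a hypothetical name.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365


@dataclass
class GpuUnitEconomics:
    """Per-GPU-hour economics using the figures in the text (USD)."""
    price_per_hr: float = 2.50
    capex: float = 30_000.0
    lifespan_years: float = 3.0
    electricity_per_hr: float = 0.10
    dc_ops_per_hr: float = 0.30
    software_per_hr: float = 0.10

    def depreciation_per_hr(self) -> float:
        # Straight-line depreciation spread over every hour of the asset's life.
        return self.capex / (self.lifespan_years * HOURS_PER_YEAR)

    def cost_per_hr(self) -> float:
        # Simplification: all costs are incurred whether or not the GPU is rented.
        return (self.electricity_per_hr + self.dc_ops_per_hr
                + self.software_per_hr + self.depreciation_per_hr())

    def gross_profit_per_hr(self, utilization: float = 1.0) -> float:
        # Revenue only accrues for the fraction of hours actually sold.
        return self.price_per_hr * utilization - self.cost_per_hr()

    def gross_margin(self, utilization: float = 1.0) -> float:
        return self.gross_profit_per_hr(utilization) / (self.price_per_hr * utilization)

    def breakeven_utilization(self) -> float:
        return self.cost_per_hr() / self.price_per_hr

    def depreciation_share_of_cost(self) -> float:
        return self.depreciation_per_hr() / self.cost_per_hr()


if __name__ == "__main__":
    h100 = GpuUnitEconomics()
    for u in (1.0, 0.8):
        print(f"utilization {u:.0%}: ${h100.gross_profit_per_hr(u):.2f}/hr, "
              f"{h100.gross_margin(u):.0%} gross margin")
    print(f"break-even utilization: {h100.breakeven_utilization():.0%}")
    print(f"depreciation share of hourly cost: {h100.depreciation_share_of_cost():.0%}")
```

At full utilization it reproduces the ~$0.86/hr and ~34% margin. Under these assumptions the margin roughly halves at 80% utilization, and gross profit turns negative below about 66%. Depreciation makes up roughly 70% of the hourly cost, consistent with TCO being weighted toward chips rather than hosting.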

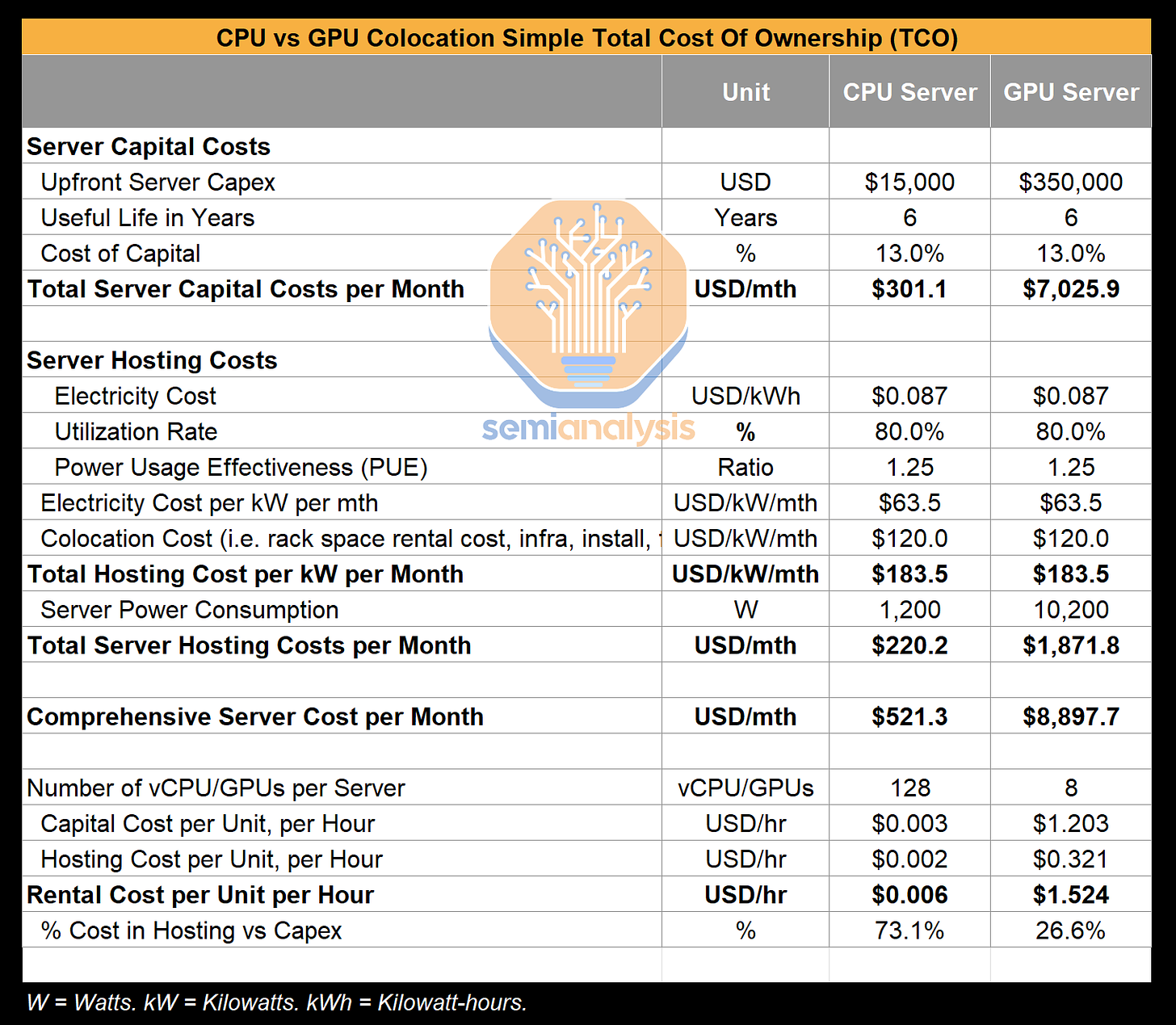

From SemiAnalysis:

One interesting takeaway is that the TCO (total cost of ownership) of running GPUs is weighted heavily toward chips rather than hosting costs. Oligopolistic effects in GPU Cloud are therefore more a function of cost of capital than of economies of scale, which makes the market less susceptible to natural monopoly. This means new GPU Cloud players like CoreWeave, Fluidstack and Lambda have a real shot at taking a significant share of this market.

As the model above shows, the important parameters are overall COGS, asset lifetime and depreciation rate, payback period, and utilization. So how are these factored into the pricing of compute contracts?

From Contract to ROI

Put simply, for every $1 spent on Nvidia chips, the GPU Cloud provider generates $N over some time period, usually 3-4 years. Instead of cost-plus pricing (the hourly cost of operating the chips plus a margin), contracts are priced over the total asset lifetime. This means GPU Clouds keep the benefit of any efficiencies they find that lower their cost of delivery.

Specifically, GPU Cloud providers estimate how quickly they can pay down debt given their costs. This yields a payback period; a buffer is added for variability, and net payment terms are set for customers. The contract must run longer than the payback period for the provider to generate income, and this is the basis of the ROI calculation.
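The payback logic can be sketched as simple arithmetic. This is an illustration under stated assumptions, not how any particular provider prices contracts: it reuses the per-hour figures from the unit economics above ($30k per GPU, $2.50/hr, $0.50/hr of cash operating costs), treats depreciation as non-cash, ignores interest on the debt, and uses a hypothetical 20% buffer. All function names are hypothetical.

```python
HOURS_PER_MONTH = 24 * 365 / 12  # 730 hours


def payback_months(capex: float, price_per_hr: float, cash_opex_per_hr: float,
                   utilization: float = 1.0) -> float:
    """Months of contracted usage needed to recover the upfront GPU spend.
    Depreciation is non-cash, so only cash opex is subtracted; interest on
    the debt is ignored for simplicity."""
    net_cash_per_hr = price_per_hr * utilization - cash_opex_per_hr
    if net_cash_per_hr <= 0:
        raise ValueError("never pays back at this price and utilization")
    return capex / net_cash_per_hr / HOURS_PER_MONTH


def min_contract_months(payback: float, buffer: float) -> float:
    """The contract must exceed payback plus a safety buffer (0.2 = 20%)."""
    return payback * (1 + buffer)


def contract_roi(capex: float, price_per_hr: float, cash_opex_per_hr: float,
                 contract_months: float, utilization: float = 1.0) -> float:
    """Net cash earned over the contract per $1 of capex, minus the $1 itself."""
    net_cash = ((price_per_hr * utilization - cash_opex_per_hr)
                * HOURS_PER_MONTH * contract_months)
    return (net_cash - capex) / capex


if __name__ == "__main__":
    capex, price, cash_opex = 30_000.0, 2.50, 0.10 + 0.30 + 0.10
    pb = payback_months(capex, price, cash_opex)
    print(f"payback: {pb:.1f} months")
    print(f"minimum contract with a 20% buffer: {min_contract_months(pb, 0.20):.1f} months")
    print(f"ROI on a 36-month contract: {contract_roi(capex, price, cash_opex, 36):.0%}")
```

Under these assumptions payback takes about 20.5 months, a 20% buffer pushes the minimum contract to roughly 25 months, and a 36-month contract returns about $1.75 of cash per $1 of chips before interest. Lower utilization or a debt interest charge would lengthen the payback period and shrink that multiple.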

At the end of the contract, GPU Clouds own the depreciated asset and are making pure income after COGS. GPU Clouds are effectively taking on the risk of utilization and GPU lifespan so AI companies don’t have to.

This tells us that in the long run, GPU Clouds will likely be valued as a function of net income with some variability premium from differentiated software and/or customers. Until then, it’ll be a function of perceived forward compute demand.

Winner Winner GPU Dinner

What does all of this tell us about which GPU-accelerated Cloud companies may win?

They should be vertically integrated (own and operate GPUs/DCs) to be able to handle the complexities of both hardware and software as clusters scale up in size and sell higher margin services.

They focus on serving the future AI winners (and not the long tail). Foundation model companies like OpenAI will spend orders of magnitude more on compute than companies not competing for AGI, even Fortune 500 companies.

They find elegant ways of weathering demand volatility storms, with high sustained utilization and natural hedges.

They have a path towards lowering their cost of capital.

They focus on availability and scale first then streamlining cost efficiencies later.

They need to build and serve sophisticated software frameworks to optimize MFU. These value-added services need to i) speed up training runs (parallelization, dynamic workload re-allocation, data ingress/egress) and ii) optimize costs (better observability, auto-rebooting to save cycles) since high utilization is table stakes.

The End. (State)

GPU Clouds are services competing on scale, price, availability and service (software/support). We already touched on some important characteristics of the potential winners, and ways we might value GPU Clouds.

The incumbents in this market (hyperscalers) have an advantage in their low cost of capital, physical infrastructure and ability to scale capacity. Hyperscalers hold, and will maintain, a large chunk of market share.

Nonetheless, new providers will scale up and compete on important characteristics like the six listed above. This new market will have enough room for the 3-4 best GPU Clouds to become multi-billion-dollar companies.

They’ll have fluctuating margins and profitability for a while to come. In the interim, their valuations will be a function of perceived net demand and their ability to win large contracts. In the long run, they will be valued according to net income, and their ability to stay nimble and build software to maintain their place in this big and growing market.

The next few years will be a wild time for those participating.

Thanks to Teo Leibowitz, Adam Mizrahi, Mark Haghani, Philipe Legault, for their thoughts and feedback on this article.
